| site_id | n_pats | n_pdevs | |

|---|---|---|---|

| 0 | site_0 | 9 | 15 |

| 1 | site_1 | 10 | 14 |

| 2 | site_2 | 15 | 18 |

| 3 | site_3 | 9 | 10 |

| 4 | site_4 | 5 | 4 |

Deviations from the approved protocol of a clinical trial are common but need to be reported by investigators to the trial sponsor for review. Failing to do so can put patients at risk and affect the scientific quality of the trial, so detecting protocol deviation underreporting is part of the standard quality assurance activities. Investigator sites that report more deviations than their peers should also raise an alarm as this might indicate underlying quality issues. A statistical tool that quantifies the risk of over- and underreporting of protocol deviations could significantly improve quality activities, much like in the case of adverse event reporting.

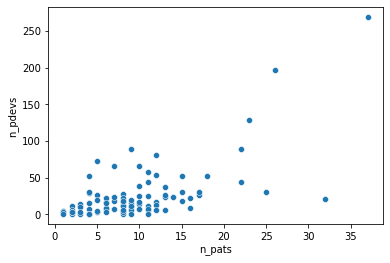

The number of protocol deviations \(n_{pdevs}\) reported by an investigator site should depend linearly on the number of patients \(n_{pats}\) enrolled at that site, as more patients mean more chances of deviations, so these are the minimal attributes that should get collected prior to the analysis. The following table is a sample of the data we will use as an example.

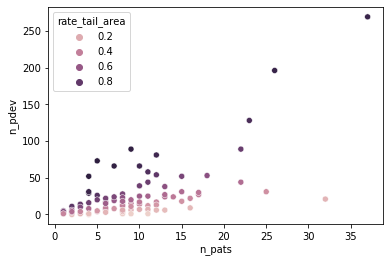

A quick glance at the full dataset reveals there is indeed a relationship that looks linear, so a potential approach would be to build a regression model \(n_{pdevs} \sim \theta \cdot n_{pats}\) and quantify how every observation of \(n_{pdevs}\) deviates from its estimation.

From that scatterplot, it also appears that the residuals of a regression would not be iid. Rather, their variance would grow as the number of patients increases. This rules out least square regression, as it assumes iid normal residuals. Since we are dealing with count data, working with the Poisson distribution is a natural approach,

\[n_{pdevs} \vert n_{pats} \sim Poi(\lambda(n_{pats})),\]

and we can set \(\lambda(n_{pats}) = \theta \cdot n_{pats}\) to reflect our assumption of a linear relationship between \(n_{pdevs}\) and \(n_{pats}\). Note that a regular Poisson regression would fail to capture that linear relationship due to its exponential link function.

In this model, we immediately have \(E\left[n_{pdevs} \vert n_{pats}\right] = Var\left[n_{pdevs} \vert n_{pats}\right] = \theta \cdot n_{pats}\), which seems to reproduce the increasing spread of \(n_{pdevs}\).

We can infer the value of \(\theta\) through maximum likelihood estimation and use the resulting conditional Poisson model at each site to compute the cumulative distribution function (CDF) of the observed numbers of protocol deviations.

Code

def loss(par, n_pat, n_dev):

theta = tf.math.exp(par[0])

dist = tfd.Poisson(n_pat * theta)

return -tf.reduce_sum(dist.log_prob(n_dev))

def compute_cdf(par, n_pat, n_dev):

theta = tf.math.exp(par[0])

dist = tfd.Poisson(n_pat * theta)

return dist.cdf(n_dev)

@tf.function

def loss_and_gradient(par, n_pat, n_dev):

return tfp.math.value_and_gradient(lambda par: loss(par, n_pat, n_dev), par)

def fit(n_pat, n_dev):

init = 2*tf.ones(1)

opt = tfp.optimizer.lbfgs_minimize(

lambda par: loss_and_gradient(par, n_pat, n_dev), init, max_iterations=1000

)

return opt

n_pats = tf.constant(data['n_pats'], dtype=tf.float32)

n_pdev = tf.constant(data['n_pdevs'], dtype=tf.float32)

mle = fit(n_pats, n_pdev)

#print(f"converged: {mle.converged}")

#print(f"iterations: {mle.num_iterations}")

x = np.linspace(0, 40)

par = mle.position

y = np.exp(par[0]) * x

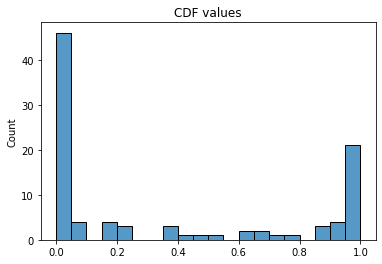

cdfs = compute_cdf(par, n_pats, n_pdev)These CDF values are concentrated around 0 and 1, which makes this approach quite impractical and suggests that the variance of the model is lower than the variance of the data.

The low variance can be increased by treating \(\lambda(n_{pats})\) as a random function, rather than a deterministic one. So we assume that \(\lambda(n_{pats})\) is drawn from a gamma distribution, \(\lambda(n_{pats}) \sim \Gamma(\alpha, \beta)\), where the rate parameter \(\beta\) is inversely proportional to the expected number of protocol deviations from a given site, \(\beta = \beta_{pat} / n_{pats}\), in order to ensure linearity in \(n_{pats}\). In this context, maximum likelihood estimation would be a nightmare to implement (because of the rate parameters of the Poisson distribution) and probably not very stable, so it is best to turn to Bayesian inference via MCMC algorithms. We thus pick gamma priors for \(\alpha\) and \(\beta_{pat}\) with a shape parameters of 2 to prevent the corresponding Markov chains from drifting too close to zero, where pathological behaviors seem to occur with more permissive priors in this model.

Code

sites = tf.constant(data['site_id'])

n_pats = tf.constant(data['n_pats'], dtype=tf.float32)

n_pdev = tf.constant(data['n_pdevs'], dtype=tf.float32)

mdl_pd = tfd.JointDistributionSequential([

#alpha

tfd.Gamma(2, 2, name='alpha'),

#beta_pt

tfd.Gamma(2, 2, name='beta_pt'),

#pdev rates for each patient

lambda beta_pt, alpha: tfd.Independent(

tfd.Gamma(alpha[...,tf.newaxis], beta_pt[...,tf.newaxis] / n_pats[tf.newaxis,...]),

reinterpreted_batch_ndims=1

),

#observed pdevs

lambda rates: tfd.Independent(tfd.Poisson(rates), reinterpreted_batch_ndims=1)

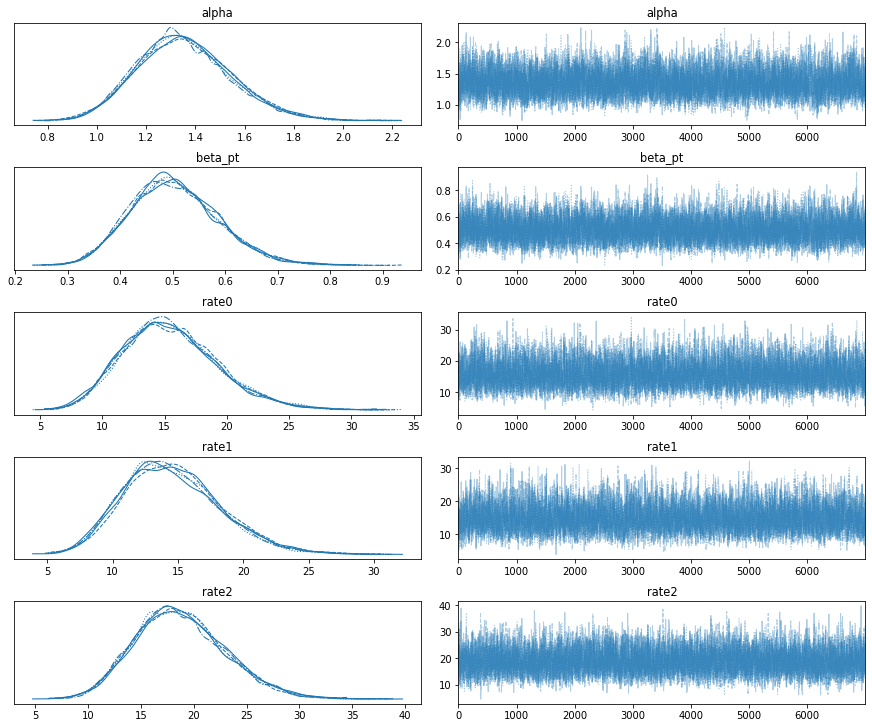

])We can sample the posterior distribution of this model with a Hamiltonian Monte Carlo algorithm and assess the convergence of the Markov chains before computing the posterior probabilities of interest.

Code

dtype = tf.dtypes.float32

nchain = 5

burnin=3000

num_steps=10000

alpha0, beta_pt0, rates0, _ = mdl_pd.sample(nchain)

init_state = [alpha0, beta_pt0, rates0]

step_size = [tf.cast(i, dtype=dtype) for i in [0.01, 0.01, 0.01]]

target_log_prob_fn = lambda *init_state: mdl_pd.log_prob(

list(init_state) + [tf.cast(n_pdev, dtype=dtype)])

unconstraining_bijectors = 3*[tfb.Exp()]

@tf.function(autograph=False, experimental_compile=True)

def run_chain(init_state, step_size, target_log_prob_fn, unconstraining_bijectors,

num_steps=num_steps, burnin=burnin):

def trace_fn(_, pkr):

return (

pkr.inner_results.inner_results.is_accepted

)

kernel = tfp.mcmc.TransformedTransitionKernel(

inner_kernel=tfp.mcmc.HamiltonianMonteCarlo(

target_log_prob_fn,

num_leapfrog_steps=3,

step_size=step_size),

bijector=unconstraining_bijectors)

hmc = tfp.mcmc.SimpleStepSizeAdaptation(

inner_kernel=kernel,

num_adaptation_steps=burnin

)

# Sampling from the chain.

[alpha, beta_pt, rates], is_accepted = tfp.mcmc.sample_chain(

num_results=num_steps,

num_burnin_steps=burnin,

current_state=init_state,

kernel=hmc,

trace_fn=trace_fn)

return alpha, beta_pt, rates, is_accepted

alpha, beta_pt, rates, is_accepted = run_chain(

init_state, step_size, target_log_prob_fn, unconstraining_bijectors)

alpha_ = alpha[burnin:,:]

alpha_ = tf.reshape(alpha_, [alpha_.shape[0]*alpha_.shape[1]])

beta_pt_ = beta_pt[burnin:,:]

beta_pt_ = tf.reshape(beta_pt_, [beta_pt_.shape[0]*beta_pt_.shape[1]])

rates_ = rates[burnin:,:]

rates_ = tf.reshape(rates_, [rates_.shape[0]*rates_.shape[1], rates_.shape[2]])

rates_dist_ = tfd.Gamma(alpha_[:,tf.newaxis], beta_pt_[:, tf.newaxis] / n_pats[tf.newaxis,...])

rates_cdf_ = rates_dist_.cdf(rates_)

posterior = {}

posterior['alpha'] = tf.transpose(alpha[burnin:, :]).numpy()

posterior['beta_pt'] = tf.transpose(beta_pt[burnin:, :]).numpy()

posterior['rate0'] = tf.transpose(rates[burnin:, :, 0])

posterior['rate1'] = tf.transpose(rates[burnin:, :, 1])

posterior['rate2'] = tf.transpose(rates[burnin:, :, 2])

az_trace = az.from_dict(posterior=posterior)

print(f'MCMC acceptance rate: {is_accepted.numpy().mean()}')

az.plot_trace(az_trace)

plt.show()MCMC acceptance rate: 0.73832

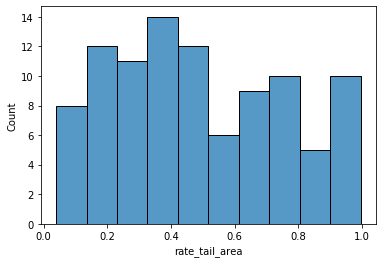

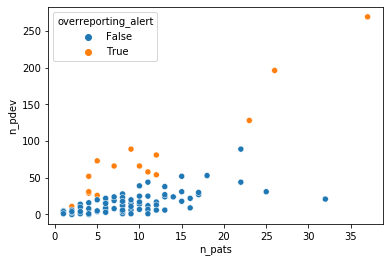

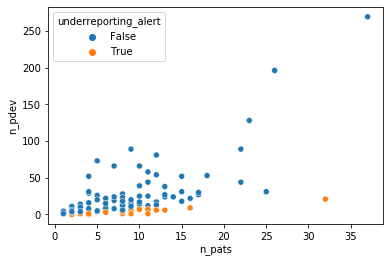

Given a Markov chain sample \((\hat\alpha, \hat\beta_{pat}, (\hat\lambda_i)_{i=1,\dots,N})\), where \(i\) indexes the investigator sites, we can evaluate the CDF of \(\Gamma(\hat\alpha, \hat\beta_{pat} / n_{pats, i})\) at \(\hat\lambda_i\) and average these quantities along the whole Markov chain to obtain an indicator of over- and underreporting. This indicator corresponds to the rate tail area of the inferred Poisson rates under their posterior predictive distribution. Low values mean a risk of underreporting, and high values a risk of overreporting (see the last column of the following sample table).

| site | n_pats | n_pdev | mean_pdev_rate | std_pdev_rate | rate_tail_area | |

|---|---|---|---|---|---|---|

| 0 | site_0 | 9 | 15 | 15.51 | 3.82 | 0.42 |

| 1 | site_1 | 10 | 14 | 14.56 | 3.72 | 0.36 |

| 2 | site_2 | 15 | 18 | 18.73 | 4.28 | 0.31 |

| 3 | site_3 | 9 | 10 | 10.77 | 3.19 | 0.30 |

| 4 | site_4 | 5 | 4 | 4.86 | 2.08 | 0.24 |

| 5 | site_5 | 7 | 19 | 18.98 | 4.26 | 0.61 |

| 6 | site_6 | 25 | 31 | 31.74 | 5.59 | 0.32 |

| 7 | site_7 | 2 | 11 | 9.82 | 2.83 | 0.82 |

| 8 | site_8 | 6 | 7 | 7.75 | 2.71 | 0.32 |

| 9 | site_9 | 17 | 27 | 27.65 | 5.17 | 0.40 |

The distribution of the rate tail areas looks more convenient than in the first simple model. Not only did we add variance with a mixture model, but we also assess the inferred Poisson parameters rather than the observations, and the former are shrunk by their prior.

This metric also seems to agree with the intuition of what underreporting and overreporting should look like.

We can set thresholds for over- and underreporting alerts at .8 and .2 respectively to illustrate how an auditor could use this model to select investigator sites to focus on.

This method illustrates the potential of Bayesian modeling to supercharge a regression analysis toolbox with a variety of likelihood functions that can capture the intricacies of the data generating process and inference methods that are more flexible in quantifying uncertainties than the standard GLM methods. These properties are particularly helpful in practical tasks such as anomaly detection or risk management that combine expert insights with quantitative modeling.