import tensorflow as tf

import tensorflow_probability as tfp

tfb = tfp.bijectors

tfd = tfp.distributions

sites = tf.constant(data['site_number'])

observed_ae = tf.constant(data['ae_count_cumulative'])

unique_sites, sites_idx, sites_counts = tf.unique_with_counts(sites)

mdl_ae = tfd.JointDistributionSequential([

#alpha

tfd.Gamma(1, 1, name='alpha'),

#beta

tfd.Gamma(1, 10, name='beta'),

#Poisson rates for all sites

lambda beta, alpha: tfd.Sample(tfd.Gamma(alpha, beta),

sample_shape=unique_sites.shape,

name='rates'),

#observed AEs

lambda rates: tfd.Independent(

tfd.Poisson(tf.gather(rates, sites_idx, axis=-1)),

reinterpreted_batch_ndims=1,

name='observations')

])This post presents a practical application of probabilistic programming and explores some technical details of a recent preprint where Timothé Ménard and I proposed a Bayesian model for the detection of adverse event underreporting in clinical trials.

A clinical trial is usually run across several investigator sites, which are required to report to the trial sponsor adverse events experienced by enrolled subjects. This is necessary to assess the safety of the intervention under investigation. Underreporting from certain sites has been a recurrent issue. Trial sponsors and health authorities rely on audits and inspections to ensure completeness of the collected safety data. This effort can and should be informed by statistical analysis of the adverse event reporting process with a focus on identifying sites that report at lower rates.

The main hurdle is that you are looking for missing data that you do not know is missing. We developed a solution around an observational model for adverse event reporting and a way to characterizing outlying investigator sites.

The relevant data is the count of adverse events reported by every patient enrolled in a study and their site assignment. To illustrate our methodology, we use data from the control arm of NCT00617669, an oncology study from Project Data Sphere.

Bayesian data analysis combines expert knowledge on the process of interest, expressed as a probabilistic model, with observed data to infer posterior distributions of the model parameters. These posterior distributions allow us to quantify uncertainties and risks, and to compute posterior expectation values of quantities of interest.

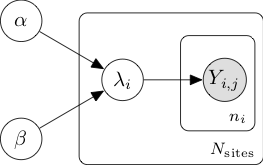

Hierarchical models provide a natural framework for subgroup analysis, where similar entities such as the sites of a single study can share information while maintaining a certain degree of independence. In our situation, at the bottom of the hierarchy, the count of adverse events reported by the \(n_i\) patients of site \(i\) can be modelled with a Poisson distribution, \(Y_i \sim \mathrm{Poi}(\lambda_i)\). The \(N_{\mathrm{sites}}\) Poisson rates \(\lambda_i\) can in turn be modelled as realizations of a random variable unique to the whole study with Gamma distribution \(\Gamma(\alpha, \beta)\). The parameters \(\alpha\) and \(\beta\) are unknown, so we assume a vague prior for both of them, \(\alpha \sim \mathrm{Exp}(1)\) and \(\beta \sim \mathrm{Exp}(10)\). The full joint distribution \[ P(\alpha, \beta, \lambda_i, Y_{i,j}) = P(\alpha)P(\beta)\prod_{i=1}^{N_{\mathrm{sites}}}P(\lambda_i\vert\alpha,\beta)\prod_{j=1}^{n_i} P(Y_{i,j}\vert \lambda_i) \] is summarized in the following graphical representation.

Numerical modelling of such joint distributions is made easy by probabilistic programming libraries such as TensorFlow-Probability (utilized here), Stan, PyMC3 or Pyro.

In Bayesian inference, the analytical derivation of the posterior distribution is often impossible, but the same probabilistic programming libraries provide efficient implementations of MCMC algorithms that return samples of the posterior distribution.

Code

dtype = tf.dtypes.float32

nchain = 10

burnin=1000

num_steps=10000

alpha0, beta0, rates0, _ = mdl_ae.sample(nchain)

init_state = [alpha0, beta0, rates0]

step_size = [tf.cast(i, dtype=dtype) for i in [.1, .1, .1]]

target_log_prob_fn = lambda *init_state: mdl_ae.log_prob(

list(init_state) + [tf.cast(observed_ae, dtype=dtype)])

# bijector to map contrained parameters to real

unconstraining_bijectors = [

tfb.Exp(),

tfb.Exp(),

tfb.Exp()

]

@tf.function(autograph=False, experimental_compile=True)

def run_chain(init_state, step_size, target_log_prob_fn, unconstraining_bijectors,

num_steps=num_steps, burnin=burnin):

def trace_fn(_, pkr):

return (

pkr.inner_results.inner_results.is_accepted

)

kernel = tfp.mcmc.TransformedTransitionKernel(

inner_kernel=tfp.mcmc.HamiltonianMonteCarlo(

target_log_prob_fn,

num_leapfrog_steps=3,

step_size=step_size),

bijector=unconstraining_bijectors)

hmc = tfp.mcmc.SimpleStepSizeAdaptation(

inner_kernel=kernel,

num_adaptation_steps=burnin

)

# Sampling from the chain.

[alpha, beta, rates], is_accepted = tfp.mcmc.sample_chain(

num_results=num_steps,

num_burnin_steps=burnin,

current_state=init_state,

kernel=hmc,

trace_fn=trace_fn)

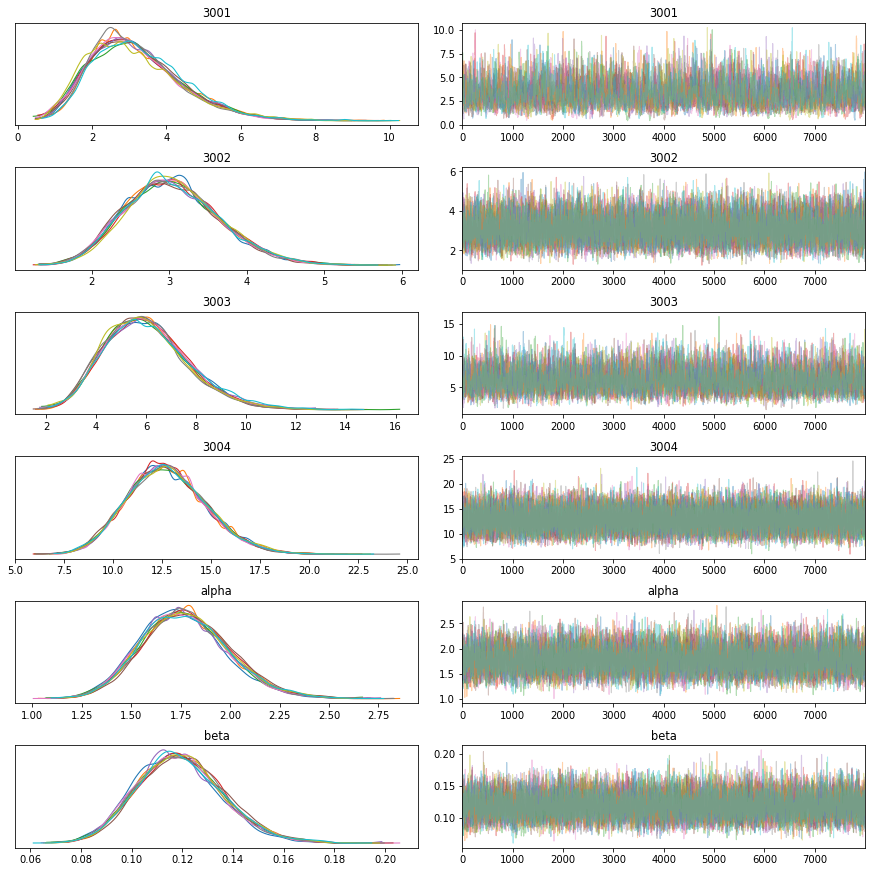

return alpha, beta, rates, is_acceptedTo assess convergence, we sample several chains that we can inspect visually (here with the ArviZ package) to make sure that they converge to the same distribution, mix well, and do not display pathological autocorrelations.

If we have to monitor several studies, we might want to automate that process. In that case, we can compute statistics of the sampled chains such as effective sample sizes or \(\hat{R}\) and implement automatic checks, for instance that \(\hat{R}\) is sufficiently close to 1.

| mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_mean | ess_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 3001 | 3.193 | 1.246 | 1.081 | 5.565 | 0.022 | 0.016 | 3146.0 | 3146.0 | 2849.0 | 3764.0 | 1.0 |

| 3002 | 3.050 | 0.616 | 1.964 | 4.250 | 0.006 | 0.004 | 11787.0 | 11787.0 | 11502.0 | 17056.0 | 1.0 |

| 3003 | 6.043 | 1.695 | 3.084 | 9.280 | 0.022 | 0.015 | 6031.0 | 6031.0 | 5772.0 | 9765.0 | 1.0 |

| 3004 | 12.764 | 2.030 | 9.037 | 16.628 | 0.014 | 0.010 | 19819.0 | 19819.0 | 19489.0 | 28664.0 | 1.0 |

| alpha | 1.774 | 0.223 | 1.364 | 2.192 | 0.003 | 0.002 | 6352.0 | 6352.0 | 6253.0 | 16128.0 | 1.0 |

| beta | 0.119 | 0.017 | 0.087 | 0.151 | 0.000 | 0.000 | 7576.0 | 7576.0 | 7441.0 | 18742.0 | 1.0 |

From the samples \((\hat{\alpha}, \hat{\beta}, \hat{\lambda}_i)\) of the Markov chain, we can estimate the posterior risk of underreporting. One way to do it is to compute the left tail area of each \(\lambda_i\) (remember that the index \(i\) enumerates the sites) under the distribution \(\Gamma(\hat{\alpha}, \hat{\beta})\) and average it along the trace of the Markov chain. This corresponds to the probability that a Poisson rate drawn randomly from the study level distribution falls below the inferred Poisson rate of site \(i\), or, more explicitly, that a reference site from the same study would report less adverse events.

| site | mean_ae_rate | std_ae_rate | rate_tail_area | observed_ae | |

|---|---|---|---|---|---|

| 0 | 3001 | 3.20 | 1.25 | 0.09 | [4, 1] |

| 1 | 3002 | 3.05 | 0.62 | 0.08 | [2, 2, 1, 2, 5, 5, 5, 1] |

| 2 | 3003 | 6.04 | 1.69 | 0.22 | [7, 4] |

| 3 | 3004 | 12.76 | 2.03 | 0.52 | [3, 27, 8] |

| 4 | 3005 | 9.35 | 2.10 | 0.37 | [12, 6] |

| 5 | 3006 | 3.69 | 1.32 | 0.11 | [2, 4] |

| 6 | 3007 | 6.03 | 2.31 | 0.22 | [5] |

| 7 | 3008 | 17.95 | 1.72 | 0.69 | [11, 4, 16, 31, 23, 23] |

| 8 | 3009 | 6.52 | 1.76 | 0.24 | [6, 6] |

| 9 | 3010 | 14.71 | 0.92 | 0.59 | [21, 10, 6, 17, 10, 7, 26, 19, 18, 1, 18, 23, ... |

A lower value of this rate tail area thus indicates a higher risk of underreporting. This metric can be used by auditors and inspectors to prioritize their activities. Moreover, since it is the probability of a specified event, one can immediately compare the rate tail areas of sites from different studies. This is especially interesting for quality programs that oversee several trials.

This approach demonstrates the flexibility of Bayesian methods to build models that answer specific questions about a given process. In this example, the reporting rates of the different sites are the quantities of interest, but they are unobserved and have to be inferred from the available data with a mathematical model. The user-friendly API of modern probabilistic programming libraries combined with efficient inference algorithms have been making this type of workflow much easier than in the past and will certainly fuel a broader adoption in sectors that have not been traditionally driven by quantitative insights.