Note: This is a second version of the article, edited on 2022-11-09, with a better method to reject outliers suggested by Isaac Skog.

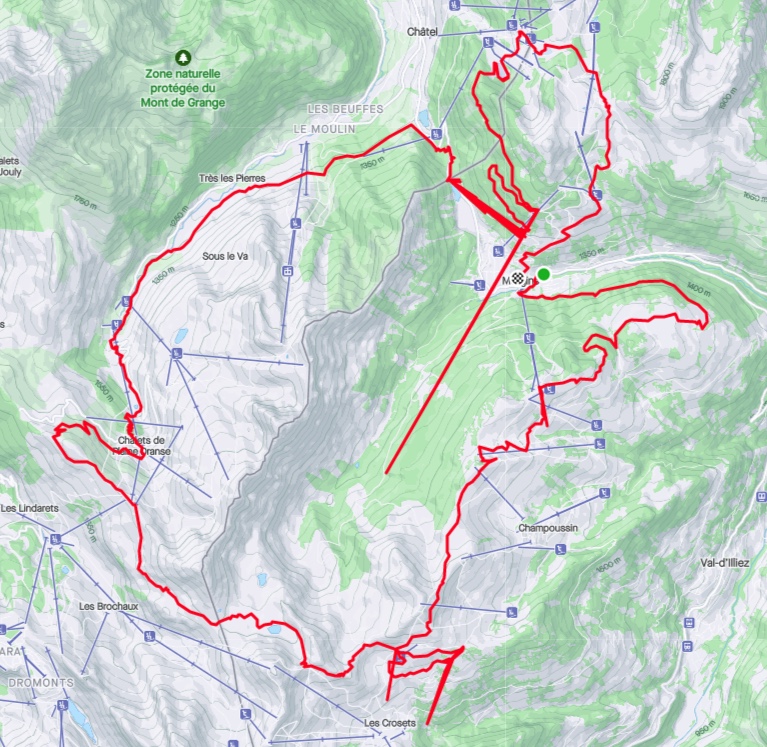

Like many cyclists and mountain bikers, I record and share my rides with Strava. When I use my electric mountain bike, I normally export the relevant data to Strava from the Bosch eBike Flow app that controls and monitors the performance of the motor and gets geolocation data from the mobile phone. I noticed that this data is sometimes affected by measurement errors that look like weird spikes on my routes. This is visible on this screenshot of a ride I did in Morgins last summer.

To the data scientist in me who likes to correlate how much he rides week on week with the amount of ice cream he eats, this is frustrating because these errors are reflected in the total distances and elevation gains.

According to my satellite navigation guru friend Yannick, this can happen when the signal from a satellite bounces on cliffs before reaching the receiver, and I assume that the processing software puts strong priors on roads and trails that lead to funky corrections. For instance, the border crossing of Pas de Morgins seems to attract quite a lot of points from the nearby hillside.

Measurement errors can be somewhat corrected with signal processing techniques, but first we need to get the data. Strava allows you to export gpx files of your rides, which can be read with the gpxpy library. The result is a time series of latitude, longitude and elevation measurements collected every second (except when the motor automatically turns off when I spend too much time eating snacks or petting cows and alpacas), and as a preprocessing step we can normalize them.

Code

with open('my_data/Morgins_roundtrip.gpx') as gpx_file:

gpx = gpxpy.parse(gpx_file)

route_info = []

for track in gpx.tracks:

for segment in track.segments:

for point in segment.points:

route_info.append({

'time': point.time,

'latitude': point.latitude,

'longitude': point.longitude,

'elevation': point.elevation

})

def normalize(series):

return (series - series[0])/series.std()

def unnormalize(normalized_series, ref_series):

return normalized_series * ref_series.std() + ref_series[0]

route_df = (pd.DataFrame(route_info)

.assign(norm_lat=lambda x: normalize(x.latitude))

.assign(norm_lon=lambda x: normalize(x.longitude))

.assign(norm_elev=lambda x: normalize(x.elevation))

)

route_df.head()| time | latitude | longitude | elevation | norm_lat | norm_lon | norm_elev | |

|---|---|---|---|---|---|---|---|

| 0 | 2022-07-03 10:12:15+00:00 | 46.238541 | 6.861996 | 1302.3 | 0.000000 | 0.000000 | 0.000000 |

| 1 | 2022-07-03 10:12:16+00:00 | 46.238541 | 6.861996 | 1302.3 | 0.000000 | 0.000000 | 0.000000 |

| 2 | 2022-07-03 10:12:17+00:00 | 46.238670 | 6.861501 | 1303.2 | 0.005734 | -0.017145 | 0.003903 |

| 3 | 2022-07-03 10:12:18+00:00 | 46.238636 | 6.861510 | 1303.3 | 0.004223 | -0.016833 | 0.004336 |

| 4 | 2022-07-03 10:12:19+00:00 | 46.238636 | 6.861510 | 1303.3 | 0.004223 | -0.016833 | 0.004336 |

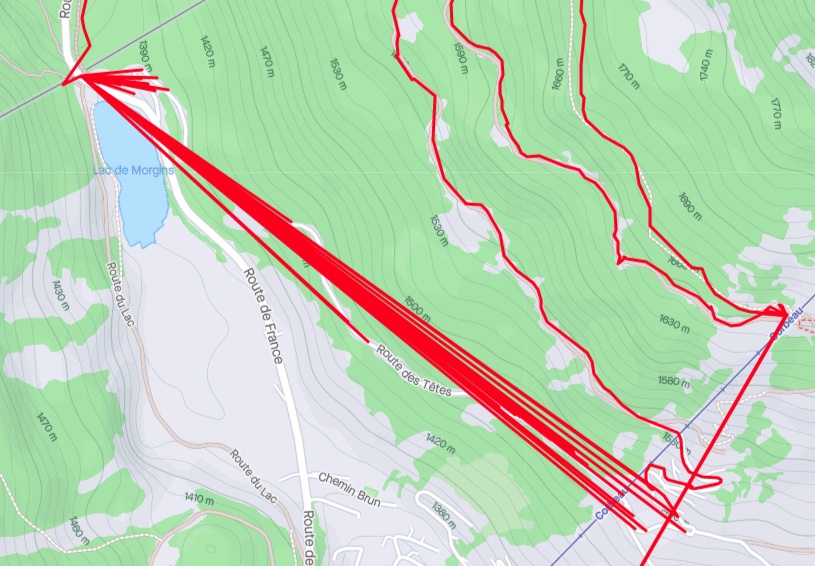

Some of the errors like the one at Pas de Morgins seem to be characterized by the track alternating between the real underlying trajectory and a single erroneous point. If we count the occurrences of the most frequent coordinates, that point at Pas de Morgins is precisely at the top of the list.

| latitude | longitude | frequency | |

|---|---|---|---|

| 0 | 46.249691 | 6.845940 | 242 |

| 1 | 46.246140 | 6.861062 | 22 |

| 2 | 46.253025 | 6.845210 | 20 |

| 3 | 46.262466 | 6.851125 | 14 |

| 4 | 46.221539 | 6.791507 | 14 |

| 5 | 46.254601 | 6.854623 | 14 |

Therefore the first obvious fix would be to exclude the most frequently repeated points and try to replace them through interpolation of the real trajectory, for instance with a Kalman filter, with the added benefit of reducing measurement noise on the rest of the trajectory.

In the version of the Kalman filter with no control input to the dynamical system, we assume that the data is generated by a latent Markov process (the state \(z_{t+1}\) at a given time step only depends on the previous step \(z_t\) and not the earlier ones) with normally distributed transitions,

\[ z_{t+1} \vert z_t \sim \mathcal{N} \left( F\, z_t + b, \ \Sigma_{tr} \right), \]

where \(F\) is a (possibly time-dependent) transition matrix, \(b\) a bias vector and \(\Sigma_{tr}\) the covariance matrix of the transition noise. We further assume that a similar Gaussian linear process generates the observations from a given latent state,

\[ x_t \vert z_t \sim \mathcal{N} \left( H\, z_t + c, \ \Sigma_{obs} \right), \]

where \(H\) is an observation matrix, \(c\) a bias term and \(\Sigma_{obs}\) the covariance of the observation process.

With these strong assumptions of normality and linear transformations, the posterior distribution of the latent states given observations can be derived through linear algebra operations. These operations are readily implemented in the tfd.LinearGaussianStateSpaceModel distribution in the TensorFlow Probability library. The forward_filter method runs a Kalman filter to compute the filtered marginal distribution \(P(z_t \vert x_{1..t})\) conditioned only on past observations, and the posterior_marginal method runs a Kalman smoother to compute the filtered marginal distribution \(P(z_t \vert x_{1..T})\) conditioned on the full history of observations, including the future ones. These algorithms are discussed in details in section 8.3 of Probabilistic Machine Learning: Advanced Topics. Since we have access to the whole GPS track, we will of course use the Kalman smoother. Conveniently, these methods also work if we condition only on a subset of the observations. Which observations should be ignored can be specified with an optional mask argument.

The first example in the official TensorFlow Probability documentation is precisely the tracking problem that interests us, where the latent space is the real position and the observation is the noisy measurement. Here we will increase the dimension of the latent space and add a velocity vector \(\mathbf{v}\) and an acceleration vector \(\mathbf{a}\) to the true position \(\mathbf{s} = (latitude, longitude, elevation)\), so that \(z = (\mathbf{s}, \mathbf{v}, \mathbf{a})\), and use transitions inspired by classical mechanics,

\[ z_{t+1} \vert z_t \sim \mathcal{N} \left( (\mathbf{s}_t + \mathbf{v}_t, \mathbf{v}_t + \mathbf{a}_t, 0), \Sigma_{tr} \right), \]

with a diagonal transition covariance matrix \(\Sigma_{tr}\) that carries much more uncertainty in its acceleration components. This kind of model is useful to estimate the velocity and acceleration solely from the position measurements (simply taking finite differences would be very inaccurate given the measurement noise), and it also incorporates knowledge from the laws of physics on how past states are going to influence future states. In a nutshell, it assumes that the forces affecting the bike and its rider are subject to random changes (I can brake, accelerate or turn, or collide with external obstacles), and my velocity and position are going to be a solution to Newton’s second law of motion (\(F = ma\)). Such a model can extrapolate the future position from the current velocity, and correct the velocity and acceleration estimations from the measured positions.

Note that to estimate the physical velocity and acceleration, it would be better to transform the spherical coordinates into cartesian coordinates, but for our purpose of error correction, it should be good enough to use the (locally normalized) spherical ones.

Code

# Specify a Kalman filter

ndims = 3

noise_std = .2

n_steps = len(route_df)

model = tfd.LinearGaussianStateSpaceModel(

num_timesteps=n_steps,

transition_matrix=(tf.linalg.diag(2*ndims*[1.] + ndims*[0.])

+ tf.pad(tf.eye(2 * ndims),

tf.constant([[0, ndims],

[ndims, 0]]))),

transition_noise=tfd.MultivariateNormalDiag(

scale_diag=tf.concat([1e-9 * tf.ones([ndims]),

1e-9 * tf.ones([ndims]),

3e-2 * tf.ones([ndims])],

axis=0)),

observation_matrix=tf.pad(tf.eye(ndims),

tf.constant([[0, 0],

[0, 2*ndims]])),

observation_noise=tfd.MultivariateNormalDiag(

scale_diag=noise_std * tf.ones([ndims])),

initial_state_prior=tfd.MultivariateNormalDiag(

scale_diag=1e-9 * tf.ones([3*ndims])))

# Collect the observations into a tensor

x = tf.convert_to_tensor(

route_df.loc[:n_steps-1, ['norm_lon', 'norm_lat', 'norm_elev']].values,

dtype=tf.float32)

# Specify a mask to ignore measurements that occur more than 10 times

maximum_accepted_frequency = 10

rejected_df = pd.DataFrame(

route_df.groupby(['latitude', 'longitude'])['time'].count()

>maximum_accepted_frequency

)

rejected_df.columns = ['masked']

frequency_mask = route_df.merge(rejected_df,

how='left',

left_on=['latitude', 'longitude'],

right_index=True)['masked']

# Run a smoothing recursion to extract posterior marginals for locations

# at previous timesteps while rejecting the repeated measurements

posterior_means, posterior_covs = model.posterior_marginals(x,

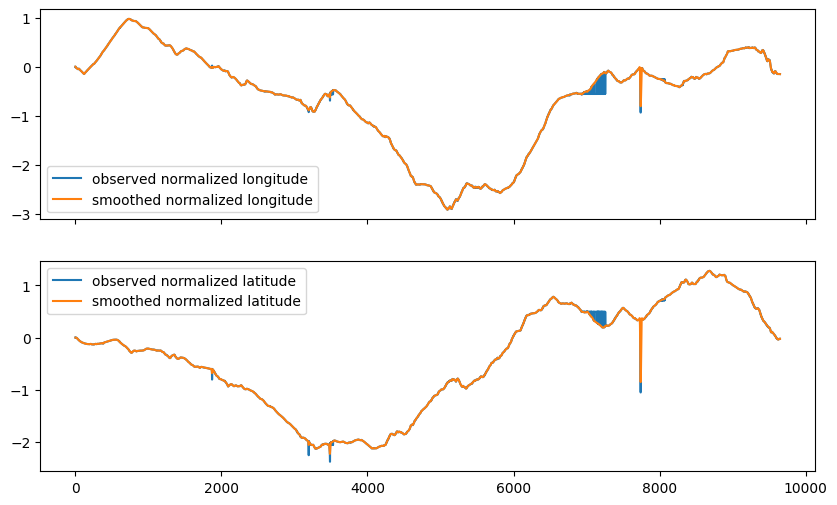

mask=frequency_mask)If we overlap the observed time series of normalized geographic coordinates with the filtered ones, it appears that the solid blocks on the observed values that correspond to the back and forth patterns do get corrected by the Kalman smoothing with masked repeated values.

The spikes caused by isolated errors are a bit attenuated by the filter, provided the observation noise scale parameter is large enough. If it is too small, extreme points are interpreted as legitimate measurements because they are unlikely to be observation errors under the model specifications. But too large a scale parameter blurs out the inferred trajectory as it cannot resolve the trail geometry.

So the trick is to run a first Kalman smoother with an observation noise scale parameter that is large enough to identify the individual outliers (while masking the repeated measurements that we already identified), followed by a second one with lower noise while masking the individual outliers in addition to the repeated measurements to infer the true trajectory. For the identification of individual outliers after the first smoothing, we can make use of the posterior marginal distributions \(\mathcal{N}(\hat\mu_t, \hat\Sigma_t)\) returned by the smoother that should “explain” the observation \(x_t\) after multiplication by the observation matrix \(H\). Concretely, the quantity

\[Q = \left(x_t - H\, \hat\mu_t\right)^T \, \left(H \, \hat\Sigma_t \, H^T \right)^{-1} \, \left(x_t - H\, \hat\mu_t\right)\]

should follow a \(\chi^2_3\) distribution under the model assumptions, so we can use a chi-squared test to pick outliers. Note that the threshold of that test has to be set fairly low to catch all outliers, because of the larger noise scale paramter of the first smoother.

Code

# Run a chi^2 test to reject outliers

threshold = 0.01

H = tf.pad(tf.eye(ndims), tf.constant([[0, 0], [0, 2*ndims]]))

S = H @ posterior_covs @ tf.transpose(H)

innovation = x - tf.linalg.matvec(H, posterior_means)

Q = tf.reduce_sum(innovation *

tf.linalg.matvec(tf.linalg.inv(S), innovation), axis=1)

chi2_score = tfd.Chi2(3).cdf(Q)

chi2_mask = chi2_score>threshold

# specify a second Kalman smoother with a lower noise parameter

noise_std_2 = .05

model2 = tfd.LinearGaussianStateSpaceModel(

num_timesteps=n_steps,

transition_matrix=(tf.linalg.diag(2*ndims*[1.] + ndims*[0.])

+ tf.pad(tf.eye(2 * ndims), tf.constant([[0, ndims],[ndims, 0]]))),

transition_noise=tfd.MultivariateNormalDiag(

scale_diag=tf.concat([1e-9 * tf.ones([ndims]),

1e-9 * tf.ones([ndims]),

3e-2 * tf.ones([ndims])], axis=0)),

observation_matrix=tf.pad(tf.eye(ndims), tf.constant([[0, 0], [0, 2*ndims]])),

observation_noise=tfd.MultivariateNormalDiag(

scale_diag=noise_std_2 * tf.ones([ndims])),

initial_state_prior=tfd.MultivariateNormalDiag(

scale_diag=1e-9 * tf.ones([3*ndims])))

# apply the smoother with the combined masks

corrected_posterior_means, corrected_posterior_covs = (

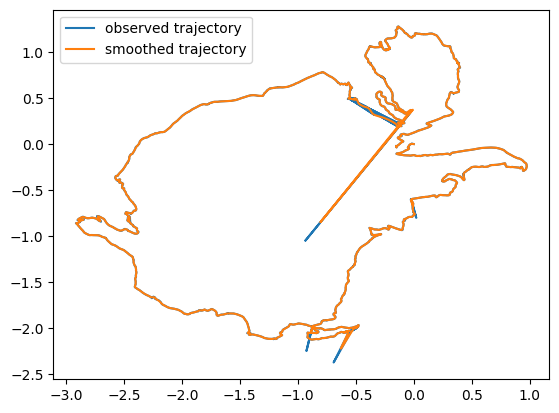

model2.posterior_marginals(x, mask=tf.math.logical_or(frequency_mask, chi2_mask)))The posterior mean of the second smoother produces a clean estimation of the real trajectory, free of any weird spike. As a last step, we can rescale it and plot it on OpenStreetMap to admire the result and think of future bike rides!

Acknowledgement

I would like to thank Maxime Baillifard for showing me his hometrails around Morgins, an area that seems to affect GPS signals like the Bermuda Triangle, Yannick Stebler for providing a rational explanation about signals bouncing on cliffs that debunked that myth, and Isaac Skog for suggesting to use a chi-squared test to reject outliers after reading a first version of this article that was using a less robust quadratic form.